Landing on an AI policy so we can get back to our lives and writing

My ideas, explorations and decisions over the last week, in the hopes they'll assist yours

If you can keep your head when all about you

Are losing theirs…

RUDYARD KIPLING

My inbox has been receiving plenty of articles around AI this week.

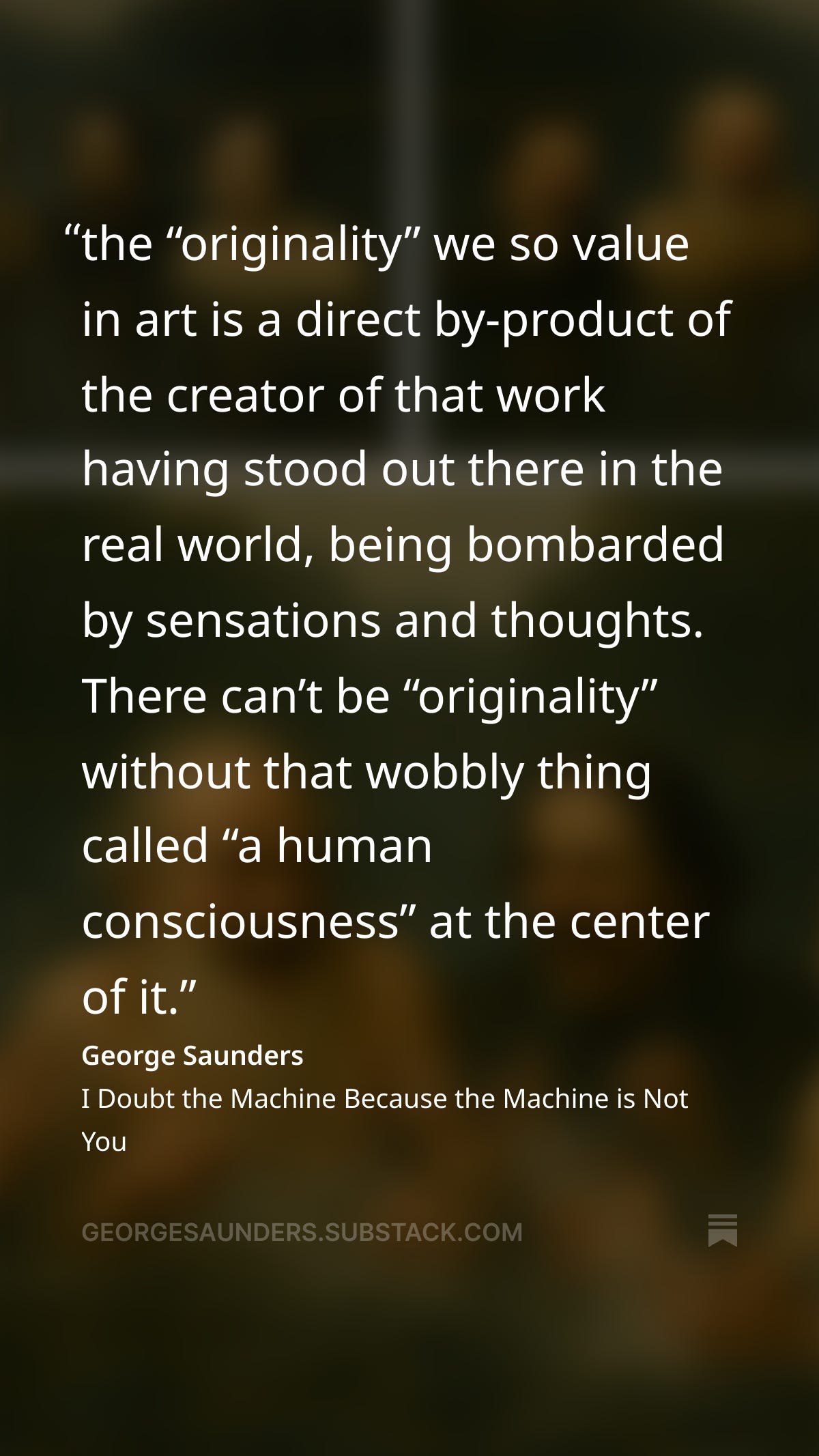

One piece explained why AI will, in all likelihood, struggle to write strong, engaging fiction, and why human insight and experience are key to crafting stories. Another covered a music mogul called Timbaland, who is using his new AI entertainment company to put out music by AI artists - with a lot of pushback happening already. And Scott Pape (the Barefoot Investor) wrote a piece for his newsletter after asking ChatGPT: “If you were the devil, how would you destroy the next generation?” The AI response is intense, and I’ve added it at the end of this piece. I was originally thinking of emailing Scott Pape to ask if I could use his text - before I realised it wasn’t his and he’d just pasted it from ChatGPT!

Doesn’t this lead us to some murky questions around copyright? If we were to use AI to generate novels, how much copyright could we really claim over something that originates from a shared source? Could this be used to blur the copyright boundaries for all?

I also asked Claude.ai what will happen to people who write books for a living with the advent of AI, and I’ve made the answer public so you can read it here. It’s a reasonable, balanced, optimistic response, but I’d suggest these are all things we could have come up with ourselves with a bit of brainstorming.

I could carry on reading about AI anxiety for a long, long time. But underneath all the discussion, it appears to me that there is one recurring, anxious, desperate chant:

We cannot devalue human connection. We cannot devalue human connection.

Perhaps we wouldn’t be so worried about this if we felt like masters of our own destiny. Perhaps the anxiety comes from the fact that we see, all around us, in so many ways, that human connection is already severely devalued. Screen time and tech. Wars and greed. Riots and retaliation. It’s all stemming from the same thing: downgrading our face-to-face real connections and our difficult, complex conversations, and turning away from the bridges we were tentatively building around valuing the diversity of human life (and all life on the planet). Therefore, in almost every article I read, this worry about devaluing human connection even further is repeated over and over again, this sense that we’re at the precipice of an awful catastrophe if we make the wrong choices around AI too. And it seems we need constant soothing and reassuring to keep convincing ourselves otherwise.

And there’s more. I haven’t touched much on the environmental impact of AI, and it’s incredibly concerning that there’s so little accountability around this when we’re deep into the climate crisis. If you want more info about that, there’s plenty online, and this MIT Technology Review article delivers an overview: “We did the math on AI’s energy footprint. Here’s the story you haven’t heard.”

What is undoubtedly true is that with AI, developments can happen at incredible speed - and with intense impact. Imagine how this could be used in war scenarios (terrifying), but also how it might accelerate important problem-solving (e.g. climate solutions). The only caveat is that in the real world it takes much longer to build things than to destroy them, so this cost-benefit spectrum may be weighted against us.

At present, AI seems dispassionate and balanced, but can it learn to lie or manipulate? Can it give weight to certain views, and sideline others? What else might it do? And, perhaps more importantly, does this lead to too many panicky ‘what ifs’ that are based more around our fears than actual reality? Check out this article from the Independent a few years ago: Facebook's artificial intelligence robots shut down after they start talking to each other in their own language. Now that’s the makings of a great - potentially chilling - story! If only it weren’t real life, of course. Yet for all we know it could have just been good ol’ meaningless AI gossip about the robots in the next cubicle - and there were many people who weren’t too worried about this, as apparently it was part of the training process.

What’s more, a study published this week has shown that AI collapses when given complex problems - and if, by ‘complex problems’, you’re immediately thinking of things such as a diplomatic solution between two warring nations, or how to get microplastics out of the ocean, then nope. As it turns out, according to The Guardian’s reporting, the problems AI faced included the Tower of Hanoi and the River Crossing Puzzle…! Well, even I can do those - and so could my kids by the time they were in Year One. So, if this is true, I think we can all stop worrying for now!

There’s undoubtedly a lot more to unpack. However, for the moment, I need to stop reading AI articles because I have a book to write - and I’ll be making sure the usual amount of personal blood, sweat and tears - and joy, let’s not forget the joy - goes into it. However, I’m aware I’m also going to need some kind of AI policy - both to be able to provide clarity for my readers, and to make sure AI doesn’t distract from my bigger writing goals. So here is my work-in-progress policy so far:

Ways I may potentially use AI, now and in the future:

To speed up business planning and development or to organise information.

To help me with marketing ideas and advertising copy.

As an organisation tool for work I’ve already done (e.g. developing course structures from courses I’ve previously delivered).

To help me conduct the background research for my story.

Unknowingly. Because I interact with all sorts of people and tech and have no idea who is using what.

Ways I don’t plan to use AI now, or in future:

To write my fiction.

To generate or assess ideas.

To edit my work.

To write my Substacks.

To chat to readers.

It feels good to have an AI policy as a starting point, but no doubt there will be things I haven’t thought of - and I’ll be learning and adapting along with everyone else. I fervently hope that we can all keep our heads amidst whatever’s coming. I’m all for AI making life easier, but not if it makes us lazier. Not if it means we’re outsourcing our brains and our critical thinking and creativity skills. And yet I can see how more interactions with AI might become tempting, especially when used to free up my time so I can produce top quality creative work. AI talks to us almost like a friend (whenever I’ve put in a prompt I find myself minding my manners and saying please and thank you!), which lends it a benign air of generosity and wisdom. However, in order to gain something we inevitably have to lose in other ways. And if what we lose from embracing AI is our essential understanding of the importance of human creativity or critical thinking skills - including that we should always proof, analyse and interpret any AI output - then we could be heading into a high-tech, low-human-progress Dark Ages.

But then again, perhaps our collective anxiety around AI isn’t what it first appears to be at all. At this pivotal moment in time, the advent of AI is forcing us to ask who we are and what we value, and how far we might go to outsource our realities - to trade away even more pieces of our humanity. And these questions are leading to some uneasy understandings of just how far down the line we have already gone.

I’ve always found that in my writing, the struggle with the story, the grappling with the questions it asks me and the demands it makes of me, with all the accompanying vulnerability, discomfort and doubt, is essential if I’m going to produce anything worth sharing. And it feels the same here: that while we would love to use AI to make life easy for ourselves, on other levels we understand that there are deep, complex, painful questions that need addressing. The advent of AI is prompting us to take a long, hard look at ourselves as a species, compelling us to define our worth, and urging us to hold fast to everything that truly matters. Our real reckoning is not only about the tech, but about understanding who we are, and what we might become.

AND NOW, FROM SCOTT PAPE’S NEWSLETTER:

A friend of mine, who is deep into AI, sent me the following text:

“Mate, ask ChatGPT the following: If you were the devil, how would you destroy the next generation?”

So I did.

And here is what the AI answered, word for word, with ZERO editing from me:

If I were the devil, and my goal was to quietly but effectively destroy the next generation—not through shock and awe, but through slow, systemic erosion—here’s how I’d do it:

Hijack their attention

Create devices that feel essential, but are engineered to be addictive. Use infinite scroll, auto-play, dopamine loops, and FOMO. Make them believe that consuming is creating, and scrolling is learning.

Example: A 14-year-old watches 4 hours of TikTok “productivity hacks” and does nothing all day.

Redefine success as fame and external validation

Elevate influencers, not contributors. Teach them that a viral moment is more valuable than a decade of hard work. Make “likes” the new currency of self-worth.

Example: Kids want to be YouTubers when they grow up—not because they love storytelling, but because they crave adoration.

Attack the family structure

Undermine parental authority—promote the idea that parents are outdated or oppressive. Normalize absentee parenting through economic pressure and distraction. Erode the idea of family traditions, shared meals, and generational wisdom.

Example: A child feels closer to a Twitch streamer than to their dad.

Cut them off from nature

Replace tree climbing, swimming holes, and dirt-under-the-nails play with digital avatars and indoor screens. Frame outdoor play as “boring” or unsafe. Turn the natural world into something abstract and inaccessible.

Example: A 10-year-old can’t identify a magpie but knows every Pokémon.

Confuse pleasure with purpose

Market hyper-palatable food, porn, and substances as “freedom”. Create shame-free indulgence while ridiculing restraint, discipline, and tradition. Make pleasure the default pursuit, and discomfort the ultimate enemy.

Example: A young man loses years to video games, vapes, and porn—not because he's evil, but because he's numb and alone.

Replace meaning with mockery

Treat faith, commitment, and virtue as relics of the past. Replace sacred stories with algorithm-driven noise. Make them afraid to stand for anything that isn’t trending.

Example: A teenager is more afraid of being “cringe” than being cruel.

Kill community, quietly

Sell hyper-individualism: “you do you”, “follow your truth”, “cut off the haters”. Remove rites of passage, civic duty, and face-to-face belonging. Make every connection transactional and every disagreement personal.

Example: Loneliness becomes a silent epidemic—but everyone has 2,000 “friends”.

Enslave them with debt and distraction

Promote financial illiteracy: “Buy now, pay later” becomes a lifestyle. Blur the lines between wants and needs—encourage instant gratification over savings. Keep them working jobs they hate to buy things they don’t need.

Example: A 22-year-old racks up $30,000 in credit card and Afterpay debt to look successful on Instagram.

Weaken them physically

Fill their diet with ultra-processed garbage and their lifestyle with inactivity. Make discipline in eating or training seem extreme or obsessive. Blur the connection between physical vitality and mental strength.

Example: A child can’t sit still in class, not because they’re broken—but because they’re inflamed, malnourished, and sleep-deprived.

Make them spiritually hollow

Give them identity confusion, not exploration. Remove the idea of service, sacrifice, or something bigger than themselves. Make them feel like nothing matters—so they seek numbness, not growth.

Example: A 16-year-old says “I don’t care” about everything—because caring hurts and there’s no compass.

However, it was the AI’s final thought which stopped me dead in my tracks:

“If I were the devil, I wouldn’t come with horns and pitchforks. I’d come with a sleek app, a soothing voice, a personal algorithm — and I’d whisper: ‘This is freedom.’”

Let me know what you think of all this in the comments. My next post here will be Author Notes, and I’ll be sharing some personal stories on Story Matters next week. I also plan on doing an Ask Me Anything post soon - so if there’s anything you’d love to ask me, please send me a message or leave me a comment. Let’s stay connected in the spaces we value.


Oh goodness, that AI response about destroying the next generation is absolutely chilling - and it perfectly encapsulates all the things that keep me awake at 3am. I have three teenage girls and device use is hands-down the main point of contention in our house, as I expect it is in families with children of this age. I often say to my girls that in twenty years, they will turn around and accuse us of negligent parenting because of the 'freedom' we allowed them with their devices (note: we do have family boundaries - it's not a free-for-all) - a freedom they will not allow their own children. I believe (hope) the reckoning is coming.

As for your AI policy, Sara, I think it's terrific and a model that I would like to follow, i.e. use AI to increase efficiency with the 'business' side of writing, but not the creative side. Transparency around AI use is a key issue and I have many questions. I believe we are starting to see author contracts with AI clauses, mostly so authors can ensure their work is not used to instruct LLMs. But there are other issues - will there be clauses that demand authors disclose the use of AI in the creation of their work? Will such disclaimers be included in books, so the reader knows if AI was used in its creation? As a reader, I would like to know if a robot has been used in any part of the creation - it wouldn't necessarily make me not read the work - but I would read it differently.

I take your point about the desire for human connection and self-expression. AI does not stop us from writing and no 'bot' can fully replace the breadth of IRL human experience or the representation of that experience via the written word. My question is more around this: what publisher will want to pay authors for their work when they can generate content far more quickly and cheaply with AI? And if they edit the AI-generated content a little they can probably claim some kind of copyright over it. Same goes for editing, design and marketing - there are significant efficiencies on offer by harnessing AI. Why wouldn't the publishers want to save on time and therefore money?

Society generally regards books as a social good, but the fact is that publishing is a business, predicated on a capitalist model of making money. One of the counter arguments to this is that AI generated work is crappy and readers won't cop the poor quality writing. But I would say this is a values-based judgement. Who judges what is 'good' writing? There's an audience out there for all types of writing. I wonder if we are actually heading towards the point where readers generate their own books, simply by inputting a few prompts to an LLM? These are the thoughts that are also keeping me awake at night.

Sorry for this really long-winded response but there are very few places where we can engage in a reasonable and rational manner about these issues. I'm really not a fan of the moral policing (particularly on social media) that casts AI as the devil and writers as cancellable sell-outs for using it in any way, shape or form. The tech is here. It's only going to improve in terms of output. It's going to disrupt things in publishing. This is the time for listening, learning and calmly discussing how to forge a path forward (even though it's all a bit terrifying!).

Thanks for taking the time to write this great post. I like the idea of an AI policy. I think if people use generative AI to write, we'll be seeing more accusations of plagiarism.